Apparently, our recent articles have caused a bit of a stir. It’s been gratifying to see so many folks commenting and weighing in on what we have planned, and on the metaverse in general. One thing that’s really struck me is the enthusiasm for the reinvention of online world technology. Whether a particular commenter is focused on decentralization, player ownership, or user creativity, there’s clearly a lot of interest in new ways of doing things.

In my experience, whenever we are exploring new ways to approach old concepts, it’s important to look backwards at the ways things have been done before. A lot of these dreams aren’t new, after all. They’ve been around since the early days of online worlds. So why is it that some of them, such as decentralization, haven’t come to pass already?

The answer lies in the nitty-gritty details of actual implementation. A lot of big dreams crash and burn when they meet reality – and some of our most cherished hopes for virtual worlds have pretty big technical barriers.

That’s why I am writing about how virtual worlds work today: so it’s easier to have these conversations, and so we can all reach for those dreams together. And I’m going to try to do it with minimal technical jargon, so everyone can follow along.

This is a big topic, so today we’ll confine ourselves to just one part of the virtual world picture: what you, as the user, see.

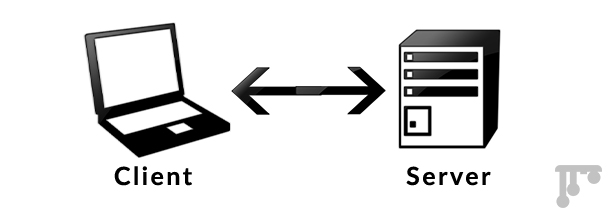

Clients over here, servers over there

Let’s start with the simplest picture that most people have of a virtual world. You have a client over here, running on a local computer of some sort. And somewhere out in the Internet, there is a server.

This picture is deeply misleading. It gives the impression there’s a nice clean divide between these two things, when that isn’t the case at all. Everything is always more complicated than you think.

You would think that a client simply displays whatever’s happening on the server side. And once upon a time, that was true: back in the text days, the server actually sent the text over the wire, and the client just plastered it on your screen. It’s similar to Stadia or Netflix today: the server sends pixels over the wire, and the client just plasters them on your screen.

But virtual worlds haven’t worked that way for a long time now.

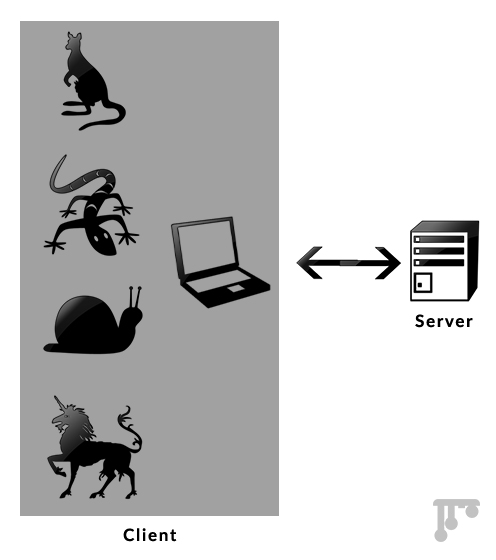

Instead, when you get a game client, it actually comes with all the art. The client is just told which piece of art to draw where. This dates back to when we couldn’t send art over the wire because it was just too big. (Of course, these days, you have, on average, around 2500 times more bandwidth than we did back when we made Ultima Online.)
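If it helps to see that as code, here is a minimal Python sketch of a client that ships with its art and is only told what to draw where. The names (`LOCAL_ART`, `DrawCommand`, `render`) and the art IDs are made up for illustration; this is not how any particular game's protocol actually looks.

```python
from dataclasses import dataclass

# Art that shipped with the client (hypothetical IDs and file names).
LOCAL_ART = {
    1: "grass_tile.bmp",
    2: "oak_tree.bmp",
    3: "player_avatar.bmp",
}


@dataclass(frozen=True)
class DrawCommand:
    """A tiny message from the server: which art to draw, and where."""
    art_id: int
    x: int
    y: int


def render(commands):
    """Look up each art ID locally and describe what would be drawn."""
    drawn = []
    for cmd in commands:
        art = LOCAL_ART.get(cmd.art_id)
        if art is None:
            # The server referenced art this client was never shipped.
            drawn.append(f"missing art {cmd.art_id} at ({cmd.x}, {cmd.y})")
        else:
            drawn.append(f"{art} at ({cmd.x}, {cmd.y})")
    return drawn


# The server only sends a few numbers per object, never the art itself.
frame = render([DrawCommand(1, 0, 0), DrawCommand(2, 5, 3), DrawCommand(9, 1, 1)])
```

Notice the last command: if the server mentions art the client doesn't have, the client has nothing to draw. That gap is exactly what the rest of this article is about.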

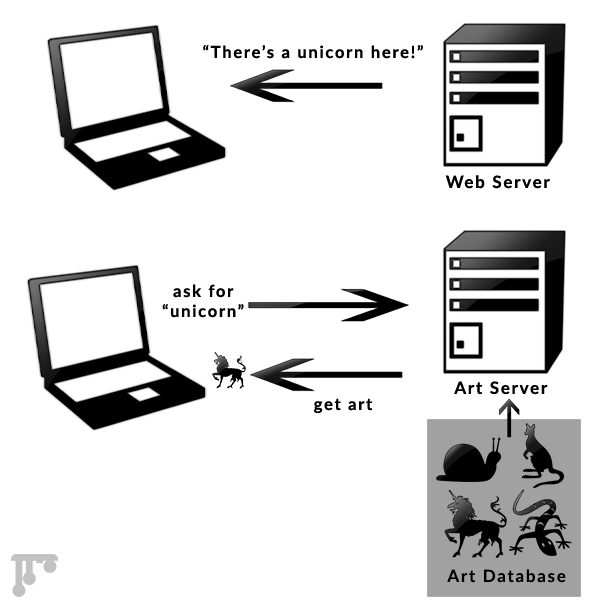

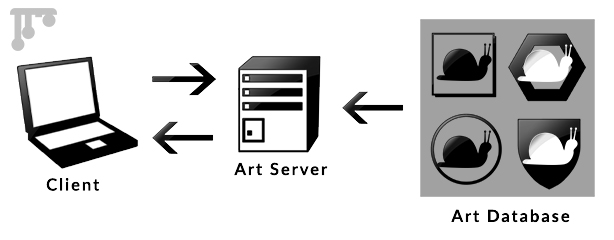

So let’s update the earlier diagram. It’s really more like this:

But it doesn’t have to work that way. Pieces of art are all just data. And something we should bear in mind as we keep going through this article (and the upcoming ones in this series), is that you can always pull data out of a box in the diagram, and once you do, it can live somewhere else.

For example, when you connect to a webpage, the art data needed to draw logos and photos and background images isn’t baked into your browser. It’s on a server too. The client gets told “draw this item!” and instead of loading it off your local hard drive, it loads it over the wire (and saves a local copy so it’s faster next time).
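Here is a rough Python sketch of that "load it over the wire, then keep a local copy" idea. The `REMOTE_ART` dictionary stands in for a real server, and the URLs, the `ArtLoader` class, and the fetch counter are all assumptions for illustration; a real browser cache is far more involved.

```python
# Stand-in for art living on a remote server (hypothetical URLs and bytes).
REMOTE_ART = {
    "https://example.com/logo.png": b"logo-bytes",
    "https://example.com/photo.jpg": b"photo-bytes",
}


class ArtLoader:
    """Loads art 'over the wire' once, then reuses the local copy."""

    def __init__(self, remote):
        self.remote = remote
        self.cache = {}           # local copies, keyed by URL
        self.network_fetches = 0  # how many times we had to go to the server

    def load(self, url):
        if url in self.cache:
            return self.cache[url]   # fast path: already saved locally
        self.network_fetches += 1    # slow path: simulate a download
        data = self.remote[url]
        self.cache[url] = data
        return data


loader = ArtLoader(REMOTE_ART)
first = loader.load("https://example.com/logo.png")
second = loader.load("https://example.com/logo.png")
```

Both calls return the same bytes, but only the first one counts as a network fetch. The art no longer lives in the client's box on the diagram; it just passes through.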

But — and this is a big but — this only works for the web because we have some technological standards. Every browser knows how to load a .jpg, a .gif, a .png, and more. The formats the data exists in are agreed upon. If you point a browser at some data in a format it doesn’t understand, it’s going to fail to load and draw the image, just like you can’t expect Instagram to know how to display an .stl meant for 3d printing.

This is a crucial concept, which is going to come up again and again in these articles: data doesn’t exist in isolation. A vinyl record and a CD might both have the same music on them, but a record player can’t handle a CD and a vinyl record doesn’t fit into the slot on a CD player (don’t try, you will regret it).

Anytime you see data, you need to think of three things: the actual content, the format it is in, and the “machine” that can recognize that format. You can think of the format as the “rules” the data needs to follow in order for the machine to read it.
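A small Python sketch may make the three pieces easier to see. The same content (a track title) is stored in two formats, and each format needs its own "machine." The `kv` format, the function names, and the `MACHINES` table are invented for this example.

```python
import json


def read_json(raw):
    """'Machine' that understands the JSON rules."""
    return json.loads(raw)["title"]


def read_key_value(raw):
    """'Machine' that understands simple 'key=value' lines."""
    fields = dict(line.split("=", 1) for line in raw.splitlines())
    return fields["title"]


# Each format name maps to the machine that can read it.
MACHINES = {"json": read_json, "kv": read_key_value}


def play(fmt, raw):
    """Hand data to the machine for its format, if we have one."""
    machine = MACHINES.get(fmt)
    if machine is None:
        raise ValueError(f"no machine understands format {fmt!r}")
    return machine(raw)


# Same content, two different formats.
as_json = '{"title": "Track One"}'
as_kv = "title=Track One"
```

Calling `play("json", as_json)` and `play("kv", as_kv)` gives back the same title, while `play("stl", ...)` raises an error because nobody built a machine for that format. Every format you want to support means another entry in that table, and another machine someone has to write.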

The thing about formats is that they need to be standardized. They’re agreed upon by committees, usually. And committees are slow and political… and of course, different members might have very different opinions on what needs to be in the standard – and for good reasons!

One of the common daydreams for metaverses is that a player should be able to take their avatar from one world to another. But… an avatar in what format? A Nintendo Mii and a Facebook profile picture and an EVE Online character and a Final Fantasy XIV character don’t just look different. They are different. FFXIV and World of Warcraft are fairly similar games in a lot of ways, but their lists of equipment slots, possible customizations, and so on are hugely different. These games cannot load each other’s characters because they do not agree on what a character is.
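To illustrate the disagreement, here is a toy Python sketch with two hypothetical games. The slot lists below are invented for the example and are not taken from FFXIV, World of Warcraft, or any other real game.

```python
# Two hypothetical games that disagree on what "a character" is.
# These slot lists are invented for illustration only.
GAME_A_SLOTS = {"head", "body", "hands", "legs", "feet", "main_hand", "off_hand"}
GAME_B_SLOTS = {"head", "chest", "shoulders", "back", "legs", "feet", "weapon"}


def import_character(character, supported_slots):
    """Return the equipment a game can place, and the slots it cannot."""
    equipment = character["equipment"]
    loaded = {slot: item for slot, item in equipment.items() if slot in supported_slots}
    rejected = sorted(set(equipment) - supported_slots)
    return loaded, rejected


hero_from_a = {
    "name": "Example Hero",
    "equipment": {"head": "iron helm", "body": "robe", "off_hand": "shield"},
}

# Try to bring a Game A character into Game B.
loaded, rejected = import_character(hero_from_a, GAME_B_SLOTS)
```

Only the helm survives the trip. Game B has no idea what a "body" or "off_hand" slot is, and that is before we get to stats, appearance, or what a "robe" even does in the new world.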

Moving avatars between worlds is actually one of the hardest metaverse problems, and we are nowhere near a solution for it at all, despite what you see on screen in Ready Player One.

There have been attempts to make standard formats for metaverses before. I’ve been in meetings where a consortium of different companies tried to settle on formats for the concept of “an avatar.” Those meetings devolved into squabbles after less than five minutes, no joke.

But even simple problems turn out to be hard – like, say, “a cube.”

VRML and its successor X3D were attempts at defining formats for 3d art for metaverses. Games tend to use different specific 3d art formats. 3d printers use other ones. These days, 3d assets by themselves – just the formats for “a cube” – have narrowed down to a relatively small set of common formats. By which I mean “only” hundreds of them.

One of the things that is intrinsic to the dream of a metaverse is democratizing and decentralizing everything. But there’s a harsh reality shown to us by the web: the more “formats” you need your “machine” to be able to “play,” the harder and more expensive the “machine” is to make.

And that means only groups that are already rich tend to make “machines.”

That’s why we only have a few web rendering engines, and they’re all made by giant megacorps. Under the hood, even Microsoft Edge is built on Chromium, the same engine that powers Google Chrome, and every single browser on your iPhone is actually running Safari’s WebKit engine.

This has substantial implications for a democratized metaverse. It’s pretty clear, for example, that Epic is trying to make the Unreal Engine into one of the few winners — it’s part of why they are not just an engine company, but also buying 3d art libraries.

So can we just pick a couple of formats and stick with them?

Part of the reason different formats are used is that subtle differences are useful for different use cases. Game engines today favor efficiency. They can import a wide range of common 3d file formats from 3d modeling software, but they then convert them internally into another format, often compressing, combining, and in general optimizing the data. That conversion process (Unreal calls it “cooking”) is not fast. It’s not real-time. In fact, it can take hours. And the result, by the way, is not compatible between engines.

Needless to say, if you are trying to make a real-time experience, you can’t realistically fetch data from a remote site in a more generic format and end up having to cook it on the client side before loading it. Everything would screech to a halt.
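As a very rough Python sketch of the idea, here is a generic text description of four vertices being "cooked" into a compact binary blob that only one hypothetical engine understands. The `ENGA` tag, the layout, and the function names are assumptions for illustration; real cooking does vastly more work (which is why it takes hours, where this toy takes no time at all).

```python
import struct

# Generic, human-readable source data: one "x y z" vertex per line.
SOURCE_MESH = "0 0 0\n1 0 0\n1 1 0\n0 1 0"

ENGINE_MAGIC = b"ENGA"  # hypothetical tag for one engine's private format


def cook(source_text):
    """Offline step: parse the text and pack it into a compact binary blob."""
    vertices = [tuple(float(n) for n in line.split()) for line in source_text.splitlines()]
    blob = ENGINE_MAGIC + struct.pack("<I", len(vertices))
    for vertex in vertices:
        blob += struct.pack("<3f", *vertex)
    return blob


def load_cooked(blob, expected_magic=ENGINE_MAGIC):
    """Runtime step: no text parsing, just unpack the layout the engine expects."""
    if blob[:4] != expected_magic:
        raise ValueError("cooked data belongs to a different engine")
    (count,) = struct.unpack_from("<I", blob, 4)
    return [struct.unpack_from("<3f", blob, 8 + 12 * i) for i in range(count)]


cooked = cook(SOURCE_MESH)
vertices = load_cooked(cooked)
```

The engine that cooked the blob can load it quickly, but a different engine (say, one expecting `b"ENGB"`) rejects it outright. The choice is between a generic format that is slow to prepare and a fast format that nobody else can read.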

All that just to draw a cube. And we’ve already run into very serious implications for the metaverse dreams of democratizing content creation, preventing monopolization or corporate dominance of the metaverse “browser” market, and enabling interoperability between worlds.

You would think that the other parts of a virtual world might be simpler than this problem. I’m afraid not. A cube, with all the above complexities, is actually the simplest part of the entire equation.

Everything else is much, much harder. And we’ll talk about that next week.
